How artificial intelligence can improve human moral judgments

Ethicists usually appeal to their own intuitions with little evidence that their intuitions are reliable or shared by others. Unfortunately, our human moral intuitions are often mistaken when we forget relevant facts, become confused by a multitude of complex facts, or are misled by framing, emotion, or bias.

Fortunately, these sources of error can be avoided by properly programmed artificial intelligence. With enough data and machine learning, AI can predict which moral judgments human individuals and groups would make if they were not misled by their human limitations. We will discuss how our group is building an AI to accomplish this goal in the context of kidney exchanges, and how this AI could be used to improve human moral judgments in many other areas of ethics, including automated vehicles and weapons.

Walter Sinnott-Armstrong is Chauncey Stillman Professor of Practical Ethics at Duke University in the Philosophy Department, the Kenan Institute for Ethics, the Duke Institute for Brain Science, and the Law School. He publishes widely in ethics, moral psychology and neuroscience, philosophy of law, epistemology, philosophy of religion, and argument analysis.

This is the first in a series of public lectures convened by the Humanising Machine Intelligence Grand Challenge. Read more about the project at hmi.anu.edu.au.

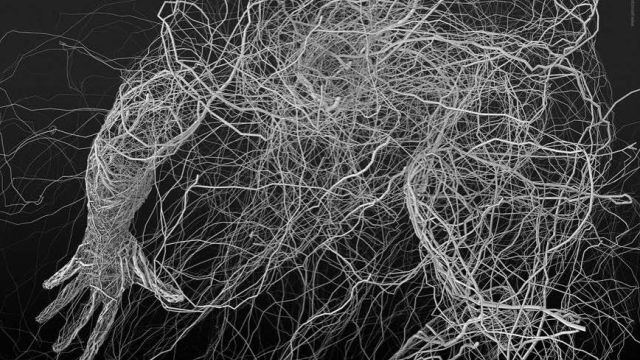

Artwork by Janusz Jurek, CC 4.0 licence: www.januszjurek.info

Location

Cinema, Cultural Centre

Speaker

- Professor Walter Sinnott-Armstrong